Reproducing CI Locally

Overview

This page explains how to reproduce CI builds and tests locally.

At this time, it applies only to conda-related builds and tests.

Intended audience

- Operations
- Developers

Reproducing CI Jobs

The GitHub Actions jobs that power RAPIDS CI are simply a collection of shell scripts that are run inside of our CI containers.

This makes it easy to reproduce build and test issues from CI on local machines.

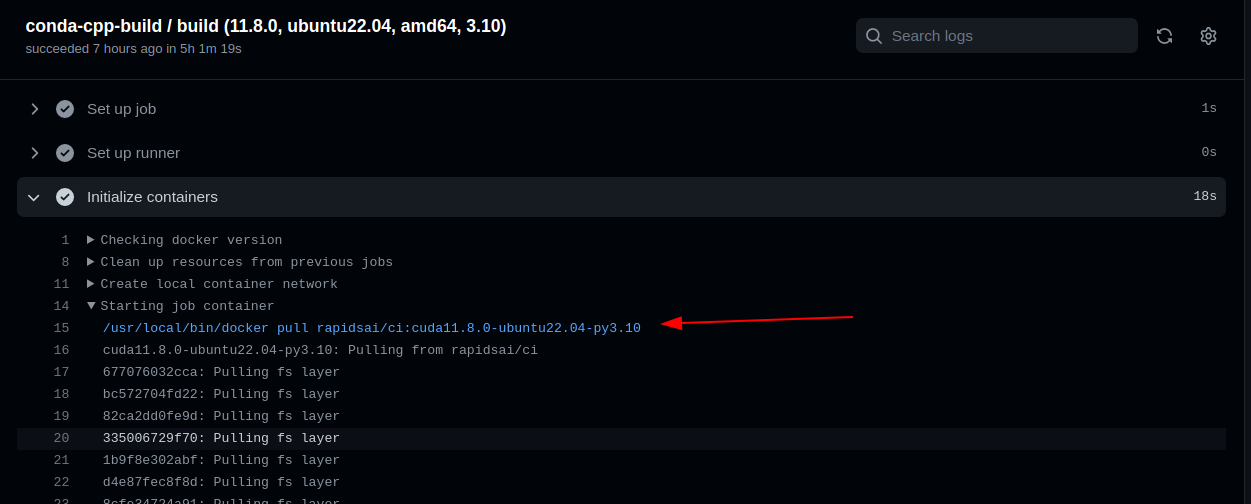

To get started, you should first identify the image that’s being used in a particular GitHub Actions job.

This can be done by inspecting the Initialize Containers step as seen in the screenshot below.

After the container image has been identified, you can volume mount your local repository into the container with the command below:

    docker run \
      --rm \
      -it \
      --gpus all \
      --pull=always \
      --network=host \
      --volume $PWD:/repo \
      --workdir /repo \
      rapidsai/ci-conda:cuda11.8.0-ubuntu22.04-py3.10

Once the container has started, you can run any of the CI scripts inside of it:

    # to build cpp...
    ./ci/build_cpp.sh

    # to test cpp...
    ./ci/test_cpp.sh

    # to build python...
    ./ci/build_python.sh

    # to test python...
    ./ci/test_python.sh

    # to test notebooks...
    ./ci/test_notebooks.sh

    # to build docs...
    ./ci/build_docs.sh

The docker command above makes the following assumptions:

- Your current directory is the repository that you wish to troubleshoot

- Your current directory has the same commit checked out as the pull-request whose jobs you are trying to debug
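The second assumption can be checked before starting the container. The following is a minimal sketch, not part of the RAPIDS tooling: the function name `same_commit` is hypothetical, and the expected SHA is assumed to be copied by hand from the pull-request page.

```shell
# Hypothetical helper (not part of RAPIDS tooling): succeeds when the
# local commit SHA starts with the (possibly abbreviated) expected SHA.
same_commit() {
  # $1 = local SHA, $2 = expected SHA from the pull-request
  # An empty expected SHA would match anything, so reject it explicitly.
  [ -n "$2" ] || return 2
  case "$1" in
    "$2"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example usage from inside the repository (the SHA is a placeholder):
#   same_commit "$(git rev-parse HEAD)" "<sha-from-pull-request>" \
#     || echo "HEAD does not match the pull-request commit"
```

Comparing by prefix lets the abbreviated SHA shown in the GitHub UI be used directly.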

A few notes about the docker command flags:

- Most of the RAPIDS conda builds occur on machines without GPUs. Only the tests require GPUs. Therefore, you can omit the --gpus flag when running local conda builds.

- The --network flag ensures that the container has access to the VPN connection on your host machine. VPN connectivity is required for test jobs since they need access to downloads.rapids.ai for downloading build artifacts from a particular pull-request. This flag can be omitted for build jobs.
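The flag notes above can be captured in a small wrapper. This is a sketch only: `ci_docker_flags` is a hypothetical name, and it assumes the repository path contains no spaces, because the output is meant to be word-split by the shell.

```shell
# Hypothetical helper: prints docker run flags for a "build" or "test" job.
# Build jobs omit --gpus and --network=host, as described in the notes above.
ci_docker_flags() {
  case "$1" in
    test)
      echo "--rm -it --pull=always --gpus all --network=host --volume $PWD:/repo --workdir /repo"
      ;;
    build)
      echo "--rm -it --pull=always --volume $PWD:/repo --workdir /repo"
      ;;
    *)
      echo "unknown job type: $1" >&2
      return 1
      ;;
  esac
}

# Example usage (left unquoted on purpose so the flags are word-split):
#   docker run $(ci_docker_flags build) rapidsai/ci-conda:cuda11.8.0-ubuntu22.04-py3.10
```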

Additional Considerations

There are a few additional considerations for running CI jobs locally.

GPU Driver Versions

RAPIDS CI test jobs may run on one of many GPU driver versions. When reproducing CI test failures locally, it’s important to pay attention to both the driver version used in CI and the driver version on your local machine.

Discrepancies in these versions could lead to inconsistent test results.

You can typically find the driver version of a CI machine in its job output.
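One way to make the comparison explicit is sketched below. The helper name `check_driver_version` is hypothetical, and the CI version is assumed to be copied by hand from the job output. The local version can be read with `nvidia-smi --query-gpu=driver_version --format=csv,noheader`.

```shell
# Hypothetical helper: reports whether the driver version seen in CI ($1)
# matches the local driver version ($2).
check_driver_version() {
  if [ "$1" = "$2" ]; then
    echo "driver versions match: $1"
  else
    echo "driver mismatch: CI=$1 local=$2"
    return 1
  fi
}

# Example usage on a machine with an NVIDIA driver installed
# (the CI version below is a placeholder, not a known RAPIDS CI value):
#   local_version="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)"
#   check_driver_version "<version-from-ci-job-output>" "$local_version"
```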

Downloading Build Artifacts for Tests

In RAPIDS CI workflows, the builds and tests occur on different machines.

Machines without GPUs are used for builds, while the tests occur on machines with GPUs.

Due to this process, the artifacts from the build jobs must be downloaded from downloads.rapids.ai in order for the test jobs to run.

In CI, this process happens transparently.

Local builds lack the context provided by the CI environment and therefore will require input from the user in order to ensure that the correct artifacts are downloaded.

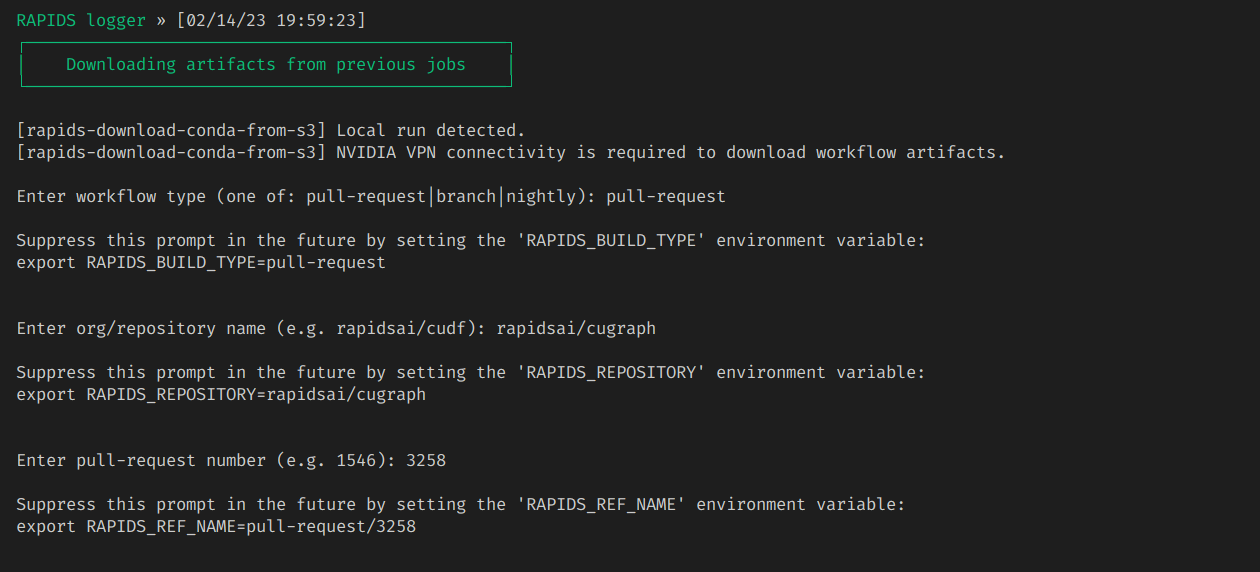

Any time the rapids-download-conda-from-s3 command (e.g. here) is encountered in a local test run, the user will be prompted for any necessary environment variables that are missing.

The screenshot below shows an example.

You can enter these values preemptively to suppress the prompts. For example:

    export RAPIDS_BUILD_TYPE=pull-request # or "branch" or "nightly"
    export RAPIDS_REPOSITORY=rapidsai/cugraph

    # Set RAPIDS_REF_NAME to exactly one of the following (a second export would override the first):
    export RAPIDS_REF_NAME=pull-request/3258 # use this type of value for "pull-request" builds
    # export RAPIDS_REF_NAME=branch-24.04 # use this type of value for "branch"/"nightly" builds

    export RAPIDS_NIGHTLY_DATE=2023-06-20 # this variable is only necessary for "nightly" builds

    ./ci/test_python.sh
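To see ahead of time which prompts a test script would raise, a check like the following can help. It is a sketch under assumptions: `missing_rapids_vars` is a hypothetical helper, and it assumes the first three variables listed above are always required, with RAPIDS_NIGHTLY_DATE required only for "nightly" builds.

```shell
# Hypothetical helper: prints the names of the variables listed above
# that are still unset or empty for the current RAPIDS_BUILD_TYPE.
missing_rapids_vars() {
  [ -n "${RAPIDS_BUILD_TYPE:-}" ] || echo RAPIDS_BUILD_TYPE
  [ -n "${RAPIDS_REPOSITORY:-}" ] || echo RAPIDS_REPOSITORY
  [ -n "${RAPIDS_REF_NAME:-}" ] || echo RAPIDS_REF_NAME
  # The nightly date only matters for "nightly" builds.
  if [ "${RAPIDS_BUILD_TYPE:-}" = "nightly" ] && [ -z "${RAPIDS_NIGHTLY_DATE:-}" ]; then
    echo RAPIDS_NIGHTLY_DATE
  fi
  return 0
}

# Example usage before running a test script:
#   missing_rapids_vars   # prints nothing when everything is set
```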

Limitations

There are a few limitations to keep in mind when running CI scripts locally.

Local Artifacts Cannot Be Uploaded

Build artifacts from local jobs cannot be uploaded to downloads.rapids.ai.

If builds are failing in CI, developers should fix the problem locally and then push their changes to a pull-request.

Then CI jobs can run and the fixed build artifacts will be made available for the test job to download and use.

To attempt a complete build and test workflow locally, you can manually update any instances of CPP_CHANNEL and PYTHON_CHANNEL that use rapids-download-conda-from-s3 with the value of the RAPIDS_CONDA_BLD_OUTPUT_DIR environment variable that is set in our CI images.

This value is used to set the output_folder of the .condarc file used in our CI images. Therefore, any locally built packages will end up in this directory.

For example:

    # Replace all local uses of `rapids-download-conda-from-s3`
    sed -ri '/rapids-download-conda-from-s3/ s/_CHANNEL=.*/_CHANNEL=${RAPIDS_CONDA_BLD_OUTPUT_DIR}/' ci/*.sh

    # Run the sequence of build/test scripts
    ./ci/build_cpp.sh
    ./ci/build_python.sh
    ./ci/test_cpp.sh
    ./ci/test_python.sh
    ./ci/test_notebooks.sh
    ./ci/build_docs.sh
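Because the sed command above edits the scripts in place, it can be useful to preview the result first. The sketch below uses a hypothetical helper, `preview_channel_rewrite`, that applies the same substitution with `-n` and the `p` flag instead of `-i`, so the files are left untouched. It uses `-E`, which GNU sed treats the same as `-r`.

```shell
# Hypothetical helper: prints the rewritten *_CHANNEL lines that the sed
# command above would produce for the given files, without modifying them.
preview_channel_rewrite() {
  sed -En '/rapids-download-conda-from-s3/ s/_CHANNEL=.*/_CHANNEL=${RAPIDS_CONDA_BLD_OUTPUT_DIR}/p' "$@"
}

# Example usage from the repository root:
#   preview_channel_rewrite ci/*.sh
```

The single quotes keep `${RAPIDS_CONDA_BLD_OUTPUT_DIR}` literal, so the variable is expanded later, when the CI script itself runs inside the container.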

VPN Access

Currently, downloads.rapids.ai is only available via the NVIDIA VPN.

If you want to run any test jobs locally, you’ll need to be connected to the VPN to download CI build artifacts.

Some Builds Rely on Versioning Information in Git Tags

Some RAPIDS projects rely on versioning information stored in git tags.

For example, some use conda recipes that rely on the mechanisms described in “Git environment variables” in the conda-build docs.

When those tags are unavailable, builds might fail with errors similar to this:

    Error: Failed to render jinja template in /repo/conda/recipes/libcudf/meta.yaml: 'GIT_DESCRIBE_NUMBER' is undefined
    conda returned exit code: 1

To fix that, pull the latest set of tags from the upstream repo.

For example, for cudf:

    git fetch \
      git@github.com:rapidsai/cudf.git \
      --tags
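A quick way to tell whether a local clone has any tags at all is sketched below. `repo_has_tags` is a hypothetical helper. It only checks that at least one tag exists, so it cannot confirm that the specific tag a recipe needs is present.

```shell
# Hypothetical helper: succeeds when the repository at the given path
# (default: current directory) has at least one git tag.
repo_has_tags() {
  [ -n "$(git -C "${1:-.}" tag --list)" ]
}

# Example usage from inside the repository:
#   repo_has_tags || echo "no tags found; fetch them from the upstream repo"
```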